Partition function (mathematics)

The partition function or configuration integral, as used in probability theory, information science and dynamical systems, is an abstraction of the definition of a partition function in statistical mechanics. It is a special case of a normalizing constant in probability theory, namely the one for the Boltzmann distribution. The partition function occurs in many problems of probability theory because, in situations where there is a natural symmetry, its associated probability measure, the Gibbs measure, has the Markov property. This means that the partition function occurs not only in physical systems with translation symmetry, but also in such varied settings as neural networks (the Hopfield network), and applications such as genomics, corpus linguistics and artificial intelligence, which employ Markov networks and Markov logic networks. The Gibbs measure is also the unique measure that has the property of maximizing the entropy for a fixed expectation value of the energy; this underlies the appearance of the partition function in maximum entropy methods and the algorithms derived therefrom.


Definition

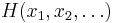

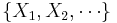

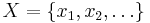

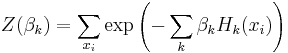

Given a set of random variables  taking on values

taking on values  , and some sort of potential function or Hamiltonian

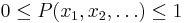

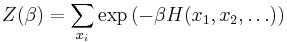

, and some sort of potential function or Hamiltonian  , the partition function is defined as

, the partition function is defined as
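As a concrete check of the definition, the sketch below evaluates the sum by brute force for a small discrete system. The three-spin ring and its nearest-neighbour Hamiltonian are hypothetical illustrations, not part of the definition, and enumerating every configuration is only feasible for a handful of variables.

```python
import itertools
import math


def partition_function(hamiltonian, values, n, beta):
    """Brute-force Z(beta): sum exp(-beta * H(x)) over every configuration
    x = (x_1, ..., x_n) whose entries are drawn from `values`."""
    return sum(
        math.exp(-beta * hamiltonian(x))
        for x in itertools.product(values, repeat=n)
    )


def ring_hamiltonian(x):
    """Hypothetical example: spins x_i in {-1, +1} on a ring with
    H(x) = -sum_i x_i * x_{i+1} (indices taken modulo n)."""
    n = len(x)
    return -sum(x[i] * x[(i + 1) % n] for i in range(n))


Z = partition_function(ring_hamiltonian, (-1, 1), n=3, beta=0.5)
```

For three spins there are two aligned configurations with $H = -3$ and six others with $H = +1$, so $Z(\beta) = 2e^{3\beta} + 6e^{-\beta}$; at $\beta = 0$ every configuration has weight one and $Z$ simply counts the $2^3 = 8$ states.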

The function H is understood to be a real-valued function on the space of states $\{X_1, X_2, \dots\}$, while $\beta$ is a real-valued free parameter (conventionally, the inverse temperature). The sum over the $x_i$ is understood to be a sum over all possible values that each of the random variables $X_i$ may take. Thus, the sum is to be replaced by an integral when the $X_i$ are continuous, rather than discrete. In that case one writes

$$Z(\beta) = \int \exp\left(-\beta H(x_1, x_2, \dots)\right) \, dx_1 \, dx_2 \cdots$$

for the case of continuously-varying $X_i$.
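When the variables are continuous the sum becomes an integral, which in practice is often approximated numerically. The sketch below assumes a single variable with the hypothetical Hamiltonian $H(x) = x^2/2$, for which the exact answer is the Gaussian integral $\sqrt{2\pi/\beta}$, and uses a plain midpoint rule on a finite interval (assuming the integrand is negligible outside it).

```python
import math


def partition_function_1d(hamiltonian, beta, lo=-20.0, hi=20.0, steps=100_000):
    """Approximate Z(beta) = integral of exp(-beta * H(x)) dx by the midpoint rule
    on [lo, hi]; assumes exp(-beta * H) is negligible outside this interval."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx  # midpoint of the k-th cell
        total += math.exp(-beta * hamiltonian(x))
    return total * dx


beta = 2.0
Z_numeric = partition_function_1d(lambda x: 0.5 * x * x, beta)
Z_exact = math.sqrt(2 * math.pi / beta)  # closed-form Gaussian integral
```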

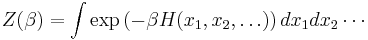

The number of variables  need not be countable, in which case the sums are to be replaced by functional integrals. Although there are many notations for functional integrals, a common one would be

need not be countable, in which case the sums are to be replaced by functional integrals. Although there are many notations for functional integrals, a common one would be

Such is the case for the partition function in quantum field theory.

A common, useful modification to the partition function is to introduce auxiliary functions. This allows, for example, the partition function to be used as a generating function for correlation functions. This is discussed in greater detail below.

The parameter β

The role or meaning of the parameter $\beta$ is best understood by examining the derivation of the partition function with maximum entropy methods. Here, the parameter appears as a Lagrange multiplier; the multiplier is used to guarantee that the expectation value of some quantity is preserved by the distribution of probabilities. Thus, in physics problems, the use of just one parameter $\beta$ reflects the fact that there is only one expectation value that must be held constant: this is the energy. For the grand canonical ensemble, there are two Lagrange multipliers: one to hold the energy constant, and another (the fugacity) to hold the particle count constant. In the general case, there is a set of parameters $\beta_k$ taking the place of $\beta$, one for each constraint enforced by a multiplier. Thus, for the general case, one has

$$Z(\beta) = \sum_{x_i} \exp\left(-\sum_k \beta_k H_k(x_i)\right)$$

The corresponding Gibbs measure then provides a probability distribution such that the expectation value of each $H_k$ is a fixed value.
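The general form $Z = \sum_{x_i} \exp\left(-\sum_k \beta_k H_k\right)$ can be evaluated the same way as the one-parameter case, with one multiplier per constrained quantity. In the hypothetical lattice gas below, sites are empty (0) or occupied (1); $H_1$ is an energy with a nearest-neighbour attraction and $H_2$ is the particle number, so that $e^{-\beta_2}$ plays the role of the fugacity. The model and its parameter values are illustrative assumptions.

```python
import itertools
import math


def generalized_partition_function(observables, betas, values, n):
    """Z = sum over configurations x of exp(-sum_k beta_k * H_k(x)),
    with one Lagrange multiplier beta_k for each observable H_k."""
    total = 0.0
    for x in itertools.product(values, repeat=n):
        exponent = -sum(b * h(x) for b, h in zip(betas, observables))
        total += math.exp(exponent)
    return total


def energy(x):
    """Hypothetical H_1: attraction of -1 between occupied neighbouring sites on a ring."""
    n = len(x)
    return -sum(x[i] * x[(i + 1) % n] for i in range(n))


def particle_number(x):
    """Hypothetical H_2: number of occupied sites."""
    return sum(x)


Z = generalized_partition_function([energy, particle_number], [1.0, 0.5], (0, 1), n=4)
```

Setting $\beta_1 = 0$ switches off the interaction, so the sites become independent and $Z = (1 + e^{-\beta_2})^n$; with every multiplier at zero, $Z$ just counts the $2^n$ configurations.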

Although the value of $\beta$ is commonly taken to be real, it need not be, in general; this is discussed in the section Normalization below.

Symmetry

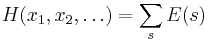

The potential function itself commonly takes the form of a sum:

where the sum over s is a sum over some subset of the power set P(X) of the set  . For example, in statistical mechanics, such as the Ising model, the sum is over pairs of nearest neighbors. In probability theory, such as Markov networks, the sum might be over the cliques of a graph; so, for the Ising model and other lattice models, the maximal cliques are edges.

. For example, in statistical mechanics, such as the Ising model, the sum is over pairs of nearest neighbors. In probability theory, such as Markov networks, the sum might be over the cliques of a graph; so, for the Ising model and other lattice models, the maximal cliques are edges.

The fact that the potential function can be written as a sum usually reflects the fact that it is invariant under the action of a group symmetry, such as translational invariance. Such symmetries can be discrete or continuous; they materialize in the correlation functions for the random variables (discussed below). Thus a symmetry in the Hamiltonian becomes a symmetry of the correlation function (and vice-versa).
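The decomposition of H into a sum over cliques, and the translational invariance it usually reflects, can be made concrete on a periodic square lattice. This sketch assumes a hypothetical 3 × 3 lattice with wrap-around boundaries and an Ising pair potential $V(a, b) = -J a b$; the cliques are the nearest-neighbour edges, and shifting a configuration by one lattice spacing leaves H unchanged because the edge set maps onto itself.

```python
def periodic_lattice_edges(L):
    """Nearest-neighbour pairs (the maximal cliques) of an L x L periodic
    square lattice; site (row, col) has index row * L + col. Assumes L >= 3
    so that no edge is listed twice."""
    edges = []
    for r in range(L):
        for c in range(L):
            i = r * L + c
            edges.append((i, r * L + (c + 1) % L))    # neighbour to the right
            edges.append((i, ((r + 1) % L) * L + c))  # neighbour below
    return edges


def hamiltonian(x, cliques, V):
    """H(x) = sum over cliques s of V evaluated on the variables in s."""
    return sum(V(tuple(x[i] for i in s)) for s in cliques)


def ising_pair(pair, J=1.0):
    """Hypothetical pair potential V(a, b) = -J * a * b."""
    a, b = pair
    return -J * a * b


def shift_columns(x, L):
    """Translate a configuration by one lattice spacing along each row."""
    return [x[r * L + (c - 1) % L] for r in range(L) for c in range(L)]


L = 3
edges = periodic_lattice_edges(L)
x = [1, -1, 1, 1, 1, -1, -1, 1, 1]
```

Comparing `hamiltonian(x, edges, ising_pair)` with `hamiltonian(shift_columns(x, L), edges, ising_pair)` shows the translation symmetry directly.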

This symmetry has a critically important interpretation in probability theory: it implies that the Gibbs measure has the Markov property; that is, each random variable is conditionally independent of all the others once the variables it directly interacts with (its neighbors) are fixed, or, equivalently, the measure is identical on the equivalence classes of the symmetry. This leads to the widespread appearance of the partition function in problems with the Markov property, such as Hopfield networks.

As a measure

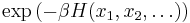

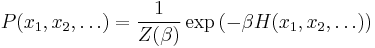

The value of the expression

can be interpreted as a likelihood that a specific configuration of values  occurs in the system. Thus, given a specific configuration

occurs in the system. Thus, given a specific configuration  ,

,

is the probability of the configuration  occurring in the system, which is now properly normalized so that

occurring in the system, which is now properly normalized so that  , and such that the sum over all configurations totals to one. As such, the partition function can be understood to provide a measure on the space of states; it is sometimes called the Gibbs measure. More narrowly, it is called the canonical ensemble in statistical mechanics.

, and such that the sum over all configurations totals to one. As such, the partition function can be understood to provide a measure on the space of states; it is sometimes called the Gibbs measure. More narrowly, it is called the canonical ensemble in statistical mechanics.
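Dividing each Boltzmann weight by Z is exactly what turns the weights into a probability distribution. The sketch below, again on a hypothetical small spin ring, builds the Gibbs distribution explicitly so that one can confirm every probability lies in [0, 1] and that they sum to one.

```python
import itertools
import math


def ring_hamiltonian(x):
    """Hypothetical example: H(x) = -sum_i x_i * x_{i+1} on a ring of spins."""
    n = len(x)
    return -sum(x[i] * x[(i + 1) % n] for i in range(n))


def gibbs_distribution(hamiltonian, values, n, beta):
    """Return a dict mapping each configuration to exp(-beta * H) / Z."""
    configs = list(itertools.product(values, repeat=n))
    weights = [math.exp(-beta * hamiltonian(x)) for x in configs]
    Z = sum(weights)  # the partition function normalizes the weights
    return {x: w / Z for x, w in zip(configs, weights)}


P = gibbs_distribution(ring_hamiltonian, (-1, 1), n=4, beta=0.8)
```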

There exists at least one configuration $(x_1, x_2, \dots)$ for which the probability is maximized; this configuration is conventionally called the ground state. If the configuration is unique, the ground state is said to be non-degenerate, and the system is said to be ergodic; otherwise the ground state is degenerate. The ground state may or may not commute with the generators of the symmetry; if it commutes, it is said to be an invariant measure. When it does not commute, the symmetry is said to be spontaneously broken.
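For $\beta > 0$ the most probable configurations are those that minimise H, so the ground states of a small system can be found by exhaustive search. In the hypothetical spin ring below, the zero-field Hamiltonian is symmetric under flipping every spin, and that symmetry maps one ground state onto the other, giving a two-fold degeneracy. The added field term $h \sum_i x_i$ (an illustrative assumption) removes the symmetry and leaves a single ground state.

```python
import itertools


def ring_hamiltonian(x, h=0.0):
    """Hypothetical example: H(x) = -sum_i x_i x_{i+1} - h * sum_i x_i on a ring."""
    n = len(x)
    return -sum(x[i] * x[(i + 1) % n] for i in range(n)) - h * sum(x)


def ground_states(hamiltonian, values, n, tol=1e-12):
    """All configurations of minimal energy, i.e. of maximal Gibbs probability
    when beta > 0 (found by enumerating every configuration)."""
    configs = list(itertools.product(values, repeat=n))
    energies = [hamiltonian(x) for x in configs]
    e_min = min(energies)
    return [x for x, e in zip(configs, energies) if e - e_min <= tol]


degenerate = ground_states(ring_hamiltonian, (-1, 1), n=4)
unique = ground_states(lambda x: ring_hamiltonian(x, h=0.5), (-1, 1), n=4)
```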

Conditions under which a ground state exists and is unique are given by the Karush–Kuhn–Tucker conditions; these conditions are commonly used to justify the use of the Gibbs measure in maximum-entropy problems.

Normalization

The values taken by $\beta$ depend on the mathematical space over which the random field varies. Thus, real-valued random fields take values on a simplex: this is the geometrical way of saying that the sum of probabilities must total to one. For quantum mechanics, the random variables range over complex projective space (or complex-valued Hilbert space), because the random variables are interpreted as probability amplitudes. The emphasis here is on the word projective, as the amplitudes are still normalized to one. The normalization for the potential function is the Jacobian for the appropriate mathematical space: it is 1 for ordinary probabilities, and i for complex Hilbert space; thus, in quantum field theory, one sees $itH$ in the exponential, rather than $\beta H$.

Expectation values

The partition function is commonly used as a generating function for expectation values of various functions of the random variables. So, for example, taking $\beta$ as an adjustable parameter, the derivative of $\log(Z(\beta))$ with respect to $\beta$,

$$\mathbf{E}[H] = \langle H \rangle = -\frac{\partial \log(Z(\beta))}{\partial \beta},$$

gives the average (expectation value) of H. In physics, this would be called the average energy of the system.
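The identity $\langle H \rangle = -\partial \log Z / \partial \beta$ can be checked numerically by comparing a finite-difference derivative of $\log Z$ with the directly computed average of H. The spin ring, the value of $\beta$ and the step size `eps` are illustrative assumptions; a central difference is used because its error shrinks like `eps**2`.

```python
import itertools
import math


def ring_hamiltonian(x):
    """Hypothetical example: H(x) = -sum_i x_i * x_{i+1} on a ring of spins."""
    n = len(x)
    return -sum(x[i] * x[(i + 1) % n] for i in range(n))


CONFIGS = list(itertools.product((-1, 1), repeat=4))


def log_Z(beta):
    """log of Z(beta) = sum_x exp(-beta H(x))."""
    return math.log(sum(math.exp(-beta * ring_hamiltonian(x)) for x in CONFIGS))


def mean_energy(beta):
    """<H> computed directly as sum_x H(x) exp(-beta H(x)) / Z."""
    weights = [math.exp(-beta * ring_hamiltonian(x)) for x in CONFIGS]
    return sum(w * ring_hamiltonian(x) for w, x in zip(weights, CONFIGS)) / sum(weights)


beta, eps = 0.7, 1e-5
energy_from_Z = -(log_Z(beta + eps) - log_Z(beta - eps)) / (2 * eps)
```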

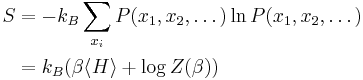

The entropy is given by

The Gibbs measure is the unique statistical distribution that maximizes the entropy for a fixed expectation value of the energy; this underlies its use in maximum entropy methods.
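The entropy formula follows by substituting $\log P = -\beta H - \log Z$ into $-\sum P \log P$. The sketch below checks the two expressions against each other on the same kind of hypothetical spin ring; natural logarithms are assumed throughout.

```python
import itertools
import math


def ring_hamiltonian(x):
    """Hypothetical example: H(x) = -sum_i x_i * x_{i+1} on a ring of spins."""
    n = len(x)
    return -sum(x[i] * x[(i + 1) % n] for i in range(n))


beta = 0.6
configs = list(itertools.product((-1, 1), repeat=5))
weights = [math.exp(-beta * ring_hamiltonian(x)) for x in configs]
Z = sum(weights)
probs = [w / Z for w in weights]

# Entropy from its definition, S = -sum P log P.
S_direct = -sum(p * math.log(p) for p in probs)
# Entropy from the partition function, S = beta * <H> + log Z.
mean_H = sum(p * ring_hamiltonian(x) for p, x in zip(probs, configs))
S_from_Z = beta * mean_H + math.log(Z)
```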

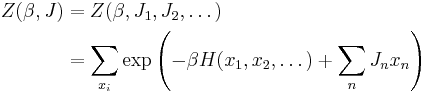

By introducing artificial auxiliary functions  into the partition function, it can then be used to obtain the expectation value of the random variables. Thus, for example, by writing

into the partition function, it can then be used to obtain the expectation value of the random variables. Thus, for example, by writing

one then has

as the expectation value of  .

.
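Differentiating $\log Z(\beta, J)$ with respect to a source $J_k$ and setting $J = 0$ reproduces $\langle x_k \rangle$. The sketch below checks this with a central finite difference on a hypothetical spin ring; a uniform field `H_FIELD` is included (an assumption) so that $\langle x_k \rangle$ is not trivially zero.

```python
import itertools
import math

BETA, H_FIELD, N = 0.5, 0.3, 4
CONFIGS = list(itertools.product((-1, 1), repeat=N))


def hamiltonian(x):
    """Hypothetical ring Hamiltonian with a uniform field h."""
    return -sum(x[i] * x[(i + 1) % N] for i in range(N)) - H_FIELD * sum(x)


def log_Z(J):
    """log Z(beta, J) with Z = sum_x exp(-beta H(x) + sum_n J_n x_n)."""
    return math.log(sum(
        math.exp(-BETA * hamiltonian(x) + sum(j * xi for j, xi in zip(J, x)))
        for x in CONFIGS
    ))


def mean_from_sources(k, eps=1e-5):
    """d log Z / d J_k at J = 0, by a central difference."""
    J_plus = [0.0] * N
    J_minus = [0.0] * N
    J_plus[k], J_minus[k] = eps, -eps
    return (log_Z(J_plus) - log_Z(J_minus)) / (2 * eps)


def mean_direct(k):
    """<x_k> computed directly from the Gibbs weights."""
    w = [math.exp(-BETA * hamiltonian(x)) for x in CONFIGS]
    return sum(wi * x[k] for wi, x in zip(w, CONFIGS)) / sum(w)
```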

Correlation functions

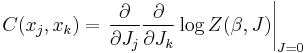

Multiple differentiations lead to the correlation functions of the random variables. Thus the correlation function  between variables

between variables  and

and  is given by:

is given by:
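Taking a second, mixed derivative of $\log Z(\beta, J)$ gives the connected correlation $\langle x_j x_k \rangle - \langle x_j \rangle \langle x_k \rangle$. The sketch below evaluates the mixed derivative with a four-point finite-difference stencil and compares it with the covariance computed directly; the ring model, the field `H_FIELD` and the step `eps` are illustrative assumptions.

```python
import itertools
import math

BETA, H_FIELD, N = 0.5, 0.3, 4
CONFIGS = list(itertools.product((-1, 1), repeat=N))


def hamiltonian(x):
    """Hypothetical ring Hamiltonian with a uniform field h."""
    return -sum(x[i] * x[(i + 1) % N] for i in range(N)) - H_FIELD * sum(x)


def log_Z(J):
    """log Z(beta, J) with Z = sum_x exp(-beta H(x) + sum_n J_n x_n)."""
    return math.log(sum(
        math.exp(-BETA * hamiltonian(x) + sum(j * xi for j, xi in zip(J, x)))
        for x in CONFIGS
    ))


def correlation_from_sources(j, k, eps=1e-4):
    """d^2 log Z / dJ_j dJ_k at J = 0, by a central mixed difference."""
    def shifted(sj, sk):
        J = [0.0] * N
        J[j] += sj * eps
        J[k] += sk * eps  # accumulates, so j == k also works
        return log_Z(J)
    return (shifted(1, 1) - shifted(1, -1) - shifted(-1, 1) + shifted(-1, -1)) / (4 * eps * eps)


def correlation_direct(j, k):
    """<x_j x_k> - <x_j><x_k> from the Gibbs weights."""
    w = [math.exp(-BETA * hamiltonian(x)) for x in CONFIGS]
    Z = sum(w)

    def mean(f):
        return sum(wi * f(x) for wi, x in zip(w, CONFIGS)) / Z

    return mean(lambda x: x[j] * x[k]) - mean(lambda x: x[j]) * mean(lambda x: x[k])
```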

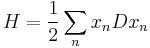

For the case where H can be written as a quadratic form involving a differential operator, that is, as

then the correlation function  can be understood to be the Green's function for the differential operator (and generally giving rise to Fredholm theory).

can be understood to be the Green's function for the differential operator (and generally giving rise to Fredholm theory).
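For a quadratic Hamiltonian the Gibbs measure is Gaussian, and completing the square in $Z(\beta, J)$ gives $\log Z(\beta, J) = \log Z(\beta, 0) + \frac{1}{2\beta} J^{T} D^{-1} J$, so the correlation function is $(\beta D)^{-1}$. The sketch below checks this for a hypothetical two-variable operator $D = \begin{pmatrix} 2 & -1 \\ -1 & 2 \end{pmatrix}$ (a second-difference matrix standing in for a differential operator) by integrating the Gibbs weight on a grid; the grid size and cutoff are assumptions.

```python
import math

BETA = 1.0
D = [[2.0, -1.0], [-1.0, 2.0]]  # hypothetical symmetric positive-definite operator


def H(x0, x1):
    """H = (1/2) sum_n x_n (D x)_n for two variables."""
    return 0.5 * (x0 * (D[0][0] * x0 + D[0][1] * x1) + x1 * (D[1][0] * x0 + D[1][1] * x1))


def grid_moments(cutoff=8.0, steps=400):
    """<x0 x0> and <x0 x1> under exp(-beta H), by the midpoint rule on
    [-cutoff, cutoff]^2; assumes the weight is negligible outside the box."""
    dx = 2 * cutoff / steps
    pts = [-cutoff + (k + 0.5) * dx for k in range(steps)]
    z = s00 = s01 = 0.0
    for x0 in pts:
        for x1 in pts:
            w = math.exp(-BETA * H(x0, x1))
            z += w
            s00 += w * x0 * x0
            s01 += w * x0 * x1
    return s00 / z, s01 / z


def inverse_beta_D():
    """(beta D)^{-1} for a 2 x 2 matrix, via the adjugate formula."""
    det = BETA ** 2 * (D[0][0] * D[1][1] - D[0][1] * D[1][0])
    return [[BETA * D[1][1] / det, -BETA * D[0][1] / det],
            [-BETA * D[1][0] / det, BETA * D[0][0] / det]]


c00, c01 = grid_moments()
G = inverse_beta_D()
```

Here the means vanish by symmetry, so the raw second moments `c00` and `c01` are already the connected correlations and should match the entries of `G`.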

General properties

Partition functions often exhibit critical scaling and universality, and are subject to the renormalization group.
